Before starting this Kubernetes cluster installation lab, you should first understand the basic Kubernetes concepts, which we covered in the article Mengenal Kubernetes.

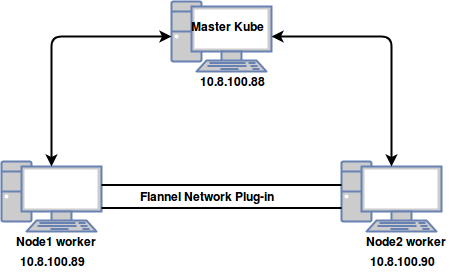

Topology Overview

The Master Node has the following components:

- API Server

Provides the Kubernetes API over HTTP, using JSON or YAML. The state of API objects is stored in etcd.

- Scheduler

A program on the master node that handles scheduling, such as launching containers on a worker node based on the resources available on that worker.

- Controller Manager

The main task of the controller manager is to monitor replication controllers and create pods so that the cluster stays in the desired state.

- etcd

A key-value database. etcd stores the cluster's configuration data and the cluster state.

- Kubectl utility

A command-line tool that connects to the API Server on port 6443. Administrators use it to create pods, services, and other objects from the command line.

The Worker Node has the following components:

- Kubelet

An agent that runs on every worker node. The kubelet connects to Docker and is responsible for creating, starting, and deleting containers in Docker.

- Kube-Proxy

Routes traffic to the appropriate container based on the IP address and port number of the incoming request. In other words, it performs port translation, similar to NAT.

- Pod

A Pod can be defined as a multi-tier application or a group of containers deployed together on a worker node (a Docker host).

Installation Requirements

- 3 virtual machines

- RAM per machine => 4098 MB

- Disk => 40GB

Master Node Configuration

Set the hostname and IP address

```
hostname   => master.kube.local
ip address => 10.8.100.88/24
```

Disable SELinux and firewalld

```
[root@master ~]# exec bash
[root@master ~]# setenforce 0
[root@master ~]# sed -i --follow-symlinks 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/sysconfig/selinux

#disable firewalld
[root@master ~]# systemctl disable firewalld
[root@master ~]# systemctl stop firewalld
[root@master ~]# systemctl status firewalld
```
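
`setenforce 0` only switches SELinux to permissive mode until the next reboot, while the `sed` edit changes the persistent setting. A minimal sketch for checking which mode the config file will apply at boot is shown here; the function name `selinux_configured_mode` is made up for illustration, and the default path assumes the usual CentOS 7 layout where `/etc/sysconfig/selinux` is a symlink to `/etc/selinux/config`.

```shell
# Print the SELINUX= value from a config file.
# Default path is an assumption based on a stock CentOS 7 install.
selinux_configured_mode() {
    # Match only the SELINUX= key, not SELINUXTYPE= or commented lines.
    sed -n 's/^SELINUX=\([a-z]*\).*/\1/p' "${1:-/etc/selinux/config}" | head -n 1
}
```

Running `selinux_configured_mode` after the `sed` step should print `disabled`; `getenforce` reports the mode currently in effect.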

Set the bridge values in sysctl.conf

```
[root@master ~]# vi /etc/sysctl.conf
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
```

Apply the sysctl settings

```
[root@master ~]# sysctl -p
sysctl: cannot stat /proc/sys/net/bridge/bridge-nf-call-ip6tables: No such file or directory
sysctl: cannot stat /proc/sys/net/bridge/bridge-nf-call-iptables: No such file or directory
```

If you get the errors shown above, load the br_netfilter kernel module and run sysctl again:

```
[root@master ~]# modprobe br_netfilter
[root@master ~]# sysctl -p
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
```
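
A module loaded with `modprobe` does not necessarily come back after a reboot, and this tutorial reboots the node a few steps later. One common way to make it persistent on systemd-based systems is a `modules-load.d` entry. The sketch below is an assumption layered on top of the original steps, not part of them; the function name and the file name `br_netfilter.conf` are chosen for illustration.

```shell
# Write a modules-load.d entry so br_netfilter is loaded at boot.
# $1 is the target directory; the default is the standard systemd location.
persist_br_netfilter() {
    dir="${1:-/etc/modules-load.d}"
    # Each line in a modules-load.d file names one kernel module.
    printf '%s\n' br_netfilter > "$dir/br_netfilter.conf"
}
```

Run it as root with no argument to write to `/etc/modules-load.d`, or pass a scratch directory to inspect the result first.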

Disable swap in fstab

```
[root@master ~]# vi /etc/fstab
/dev/mapper/centos-root /                       xfs     defaults        0 0
UUID=f522e8d8-3430-4f1f-90e5-f580813b121f /boot xfs     defaults        0 0
#/dev/mapper/centos-swap swap                   swap    defaults        0 0
```
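
The same fstab edit can be done without an editor. The following is a rough sketch, assuming a standard whitespace-separated fstab; `comment_out_swap` is a hypothetical helper that prints the modified file to standard output so the result can be reviewed before anything is overwritten.

```shell
# Print the given fstab with every active swap entry commented out.
comment_out_swap() {
    # Field 3 is the filesystem type; skip lines that are already comments.
    awk '$1 !~ /^#/ && $3 == "swap" { print "#" $0; next } { print }' "$1"
}
```

For example, `comment_out_swap /etc/fstab > /tmp/fstab.new` leaves the original untouched. Commenting out the entry only affects the next boot; `swapoff -a` turns swap off immediately.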

Reboot the master node so that the SELinux change takes effect, then add the following entries to /etc/hosts

```
[root@master ~]# vi /etc/hosts
10.8.100.88 master.kube.local
10.8.100.89 node1.kube.local
10.8.100.90 node2.kube.local
```
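
Because the same three entries are needed on every machine, it can help to script the edit so that running it twice does not create duplicates. This is only a sketch; `add_host_entry` is a hypothetical helper, and it takes the file path as an argument so it can be tried on a copy first.

```shell
# Append "IP NAME" to a hosts file unless NAME is already mapped there.
add_host_entry() {
    file="$1"; ip="$2"; name="$3"
    # Look for NAME as an exact hostname field (fields 2..NF of any line).
    if ! awk -v n="$name" '{ for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
                           END { exit !found }' "$file"; then
        printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
}
```

With the addresses from this lab, the calls would be `add_host_entry /etc/hosts 10.8.100.88 master.kube.local`, and likewise for node1 and node2.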

Set up the repository

```
[root@master ~]# vi /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://packages.cloud.google.com/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://packages.cloud.google.com/yum/doc/yum-key.gpg
        https://packages.cloud.google.com/yum/doc/rpm-package-key.gpg
```
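
As an alternative to editing the file in `vi`, the repo definition can be written with a here-document, which is easier to repeat on the worker nodes. This sketch reuses the exact URLs from the step above; the function name `write_k8s_repo` is made up. Note that these are the legacy Google-hosted package URLs used at the time of writing, and they may no longer be served, so check the current Kubernetes installation documentation before relying on them.

```shell
# Write the Kubernetes yum repo definition to the given path
# (default: the path used in this tutorial).
write_k8s_repo() {
    cat > "${1:-/etc/yum.repos.d/kubernetes.repo}" <<'EOF'
[kubernetes]
name=Kubernetes
baseurl=https://packages.cloud.google.com/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://packages.cloud.google.com/yum/doc/yum-key.gpg
       https://packages.cloud.google.com/yum/doc/rpm-package-key.gpg
EOF
}
```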

Install kubeadm and Docker

```
[root@master ~]# yum install kubeadm docker -y
[root@master ~]# systemctl restart docker && systemctl enable docker
[root@master ~]# systemctl restart kubelet && systemctl enable kubelet
```

Initialize the Kubernetes master with kubeadm init

```
[root@master ~]# kubeadm init
```

Output

```
[init] Using Kubernetes version: v1.10.3
[init] Using Authorization modes: [Node RBAC]
[preflight] Running pre-flight checks.
	[WARNING FileExisting-crictl]: crictl not found in system path
Suggestion: go get github.com/kubernetes-incubator/cri-tools/cmd/crictl
[certificates] Generated ca certificate and key.
[certificates] Generated apiserver certificate and key.
[certificates] apiserver serving cert is signed for DNS names [master.kube.local kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 10.8.100.88]
[certificates] Generated apiserver-kubelet-client certificate and key.
[certificates] Generated etcd/ca certificate and key.
[certificates] Generated etcd/server certificate and key.
[certificates] etcd/server serving cert is signed for DNS names [localhost] and IPs [127.0.0.1]
[certificates] Generated etcd/peer certificate and key.
[certificates] etcd/peer serving cert is signed for DNS names [master.kube.local] and IPs [10.8.100.88]
[certificates] Generated etcd/healthcheck-client certificate and key.
[certificates] Generated apiserver-etcd-client certificate and key.
[certificates] Generated sa key and public key.
[certificates] Generated front-proxy-ca certificate and key.
[certificates] Generated front-proxy-client certificate and key.
[certificates] Valid certificates and keys now exist in "/etc/kubernetes/pki"
[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/admin.conf"
[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/kubelet.conf"
[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/controller-manager.conf"
[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/scheduler.conf"
[controlplane] Wrote Static Pod manifest for component kube-apiserver to "/etc/kubernetes/manifests/kube-apiserver.yaml"
[controlplane] Wrote Static Pod manifest for component kube-controller-manager to "/etc/kubernetes/manifests/kube-controller-manager.yaml"
[controlplane] Wrote Static Pod manifest for component kube-scheduler to "/etc/kubernetes/manifests/kube-scheduler.yaml"
[etcd] Wrote Static Pod manifest for a local etcd instance to "/etc/kubernetes/manifests/etcd.yaml"
[init] Waiting for the kubelet to boot up the control plane as Static Pods from directory "/etc/kubernetes/manifests".
[init] This might take a minute or longer if the control plane images have to be pulled.
[apiclient] All control plane components are healthy after 83.504207 seconds
[uploadconfig] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace
[markmaster] Will mark node master.kube.local as master by adding a label and a taint
[markmaster] Master master.kube.local tainted and labelled with key/value: node-role.kubernetes.io/master=""
[bootstraptoken] Using token: pjizxk.b9hdimy6nyfpqf8p
[bootstraptoken] Configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials
[bootstraptoken] Configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token
[bootstraptoken] Configured RBAC rules to allow certificate rotation for all node client certificates in the cluster
[bootstraptoken] Creating the "cluster-info" ConfigMap in the "kube-public" namespace
[addons] Applied essential addon: kube-dns
[addons] Applied essential addon: kube-proxy

Your Kubernetes master has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

  mkdir -p $HOME/.kube
  sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
  sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
  https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of machines by running the following on each node as root:

  kubeadm join 10.8.100.88:6443 --token pjizxk.b9hdimy6nyfpqf8p --discovery-token-ca-cert-hash sha256:f7a77a76f6f83ebb8386401f31d52ce18af22121f3938a763b66ff4ea36d2362
```
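
The `kubeadm join` line at the end of this output is needed later on each worker, so it is worth saving. As a sketch, assuming the output was captured to a file (for example with `kubeadm init | tee kubeadm-init.log`, where the log file name is just an example), a small helper can pull the command back out. `extract_join_command` is a hypothetical name.

```shell
# Print the first "kubeadm join ..." command found in a saved kubeadm init log.
extract_join_command() {
    # Strip leading indentation and trailing whitespace around the command.
    sed -n 's/^[[:space:]]*\(kubeadm join .*[^[:space:]]\)[[:space:]]*$/\1/p' "$1" | head -n 1
}
```

Bootstrap tokens expire after 24 hours by default. If the saved command no longer works, `kubeadm token create --print-join-command` on the master should print a fresh one on kubeadm versions that support that flag.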

As the success message above instructs, create the .kube directory and copy the admin kubeconfig into it

```
[root@master ~]# mkdir -p $HOME/.kube
[root@master ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
[root@master ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config
```
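
kubectl reads the API Server address from this kubeconfig file. As a quick sanity check, the sketch below prints the `server:` entry, which in this lab should point at the master on port 6443. The helper name `kubeconfig_server` is made up, and the parsing assumes the simple single-cluster file that kubeadm generates.

```shell
# Print the API server URL recorded in a kubeconfig file.
kubeconfig_server() {
    # The "server:" key sits under clusters[].cluster in the YAML.
    awk '$1 == "server:" { print $2; exit }' "${1:-$HOME/.kube/config}"
}
```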

Worker Node

The worker node setup is largely the same as for the master node.

Node1

```
hostname   => node1.kube.local
ip address => 10.8.100.89/24
```

Node2

```
hostname   => node2.kube.local
ip address => 10.8.100.90/24
```

Disable SELinux and firewalld on node1 and node2

```
[root@node ~]# exec bash
[root@node ~]# setenforce 0
[root@node ~]# sed -i --follow-symlinks 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/sysconfig/selinux

#disable firewalld
[root@node ~]# systemctl disable firewalld
[root@node ~]# systemctl stop firewalld
[root@node ~]# systemctl status firewalld
```

Set the bridge values in sysctl.conf on node1 and node2

```
[root@node ~]# vi /etc/sysctl.conf
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
```

Apply the sysctl settings on node1 and node2

```
[root@node ~]# sysctl -p
sysctl: cannot stat /proc/sys/net/bridge/bridge-nf-call-ip6tables: No such file or directory
sysctl: cannot stat /proc/sys/net/bridge/bridge-nf-call-iptables: No such file or directory
```

If you get the errors shown above, load the br_netfilter kernel module and run sysctl again:

```
[root@node ~]# modprobe br_netfilter
[root@node ~]# sysctl -p
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
```

Disable swap in fstab on node1 and node2

```
[root@node ~]# vi /etc/fstab
/dev/mapper/centos-root /                       xfs     defaults        0 0
UUID=f522e8d8-3430-4f1f-90e5-f580813b121f /boot xfs     defaults        0 0
#/dev/mapper/centos-swap swap                   swap    defaults        0 0
```

Reboot node1 and node2 so that the SELinux change takes effect, then add the following entries to /etc/hosts on both worker nodes

```
[root@node ~]# vi /etc/hosts
10.8.100.88 master.kube.local
10.8.100.89 node1.kube.local
10.8.100.90 node2.kube.local
```

Set up the repository on node1 and node2

```
[root@node ~]# vi /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://packages.cloud.google.com/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://packages.cloud.google.com/yum/doc/yum-key.gpg
        https://packages.cloud.google.com/yum/doc/rpm-package-key.gpg
```

Install kubeadm and Docker

```
[root@node1 ~]# yum install kubeadm docker -y
[root@node2 ~]# yum install kubeadm docker -y
[root@node1 ~]# systemctl restart docker && systemctl enable docker
[root@node1 ~]# systemctl restart kubelet && systemctl enable kubelet
[root@node2 ~]# systemctl restart docker && systemctl enable docker
[root@node2 ~]# systemctl restart kubelet && systemctl enable kubelet
```

Then join the worker nodes to the master node

```
[root@node1 ~]# kubeadm join 10.8.100.88:6443 --token pjizxk.b9hdimy6nyfpqf8p --discovery-token-ca-cert-hash sha256:f7a77a76f6f83ebb8386401f31d52ce18af22121f3938a763b66ff4ea36d2362
[root@node2 ~]# kubeadm join 10.8.100.88:6443 --token pjizxk.b9hdimy6nyfpqf8p --discovery-token-ca-cert-hash sha256:f7a77a76f6f83ebb8386401f31d52ce18af22121f3938a763b66ff4ea36d2362
```

Output

```
[preflight] Running pre-flight checks.
	[WARNING FileExisting-crictl]: crictl not found in system path
Suggestion: go get github.com/kubernetes-incubator/cri-tools/cmd/crictl
[discovery] Trying to connect to API Server "10.8.100.88:6443"
[discovery] Created cluster-info discovery client, requesting info from "https://10.8.100.88:6443"
[discovery] Requesting info from "https://10.8.100.88:6443" again to validate TLS against the pinned public key
[discovery] Cluster info signature and contents are valid and TLS certificate validates against pinned roots, will use API Server "10.8.100.88:6443"
[discovery] Successfully established connection with API Server "10.8.100.88:6443"

This node has joined the cluster:
* Certificate signing request was sent to master and a response was received.
* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the master to see this node join the cluster.
```

Then list the nodes from the master

```
[root@master ~]# kubectl get nodes
NAME                STATUS     ROLES     AGE       VERSION
master.kube.local   NotReady   master    24m       v1.10.3
node1.kube.local    NotReady   <none>    35s       v1.10.3
node2.kube.local    NotReady   <none>    36s       v1.10.3
```
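
When scripting the setup, it is handy to detect this state automatically instead of reading the table by eye. The sketch below filters the plain-text output of `kubectl get nodes`; `not_ready_nodes` is a hypothetical helper, and it assumes the default column layout shown above, with STATUS in the second column.

```shell
# Read `kubectl get nodes` output on stdin and print the names of
# nodes whose STATUS column is anything other than exactly "Ready".
not_ready_nodes() {
    # NR > 1 skips the header row.
    awk 'NR > 1 && $2 != "Ready" { print $1 }'
}
```

Usage would be `kubectl get nodes | not_ready_nodes`. Note that a cordoned node (`Ready,SchedulingDisabled`) is also listed by this simple comparison.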

The nodes still report NotReady, so next check the pods with `--all-namespaces` on the master node

```
[root@master ~]# kubectl get pods --all-namespaces
NAMESPACE     NAME                                        READY     STATUS    RESTARTS   AGE
kube-system   etcd-master.kube.local                      1/1       Running   0          27m
kube-system   kube-apiserver-master.kube.local            1/1       Running   0          26m
kube-system   kube-controller-manager-master.kube.local   1/1       Running   0          27m
kube-system   kube-dns-86f4d74b45-vhvnv                   0/3       Pending   0          27m
kube-system   kube-proxy-8lvfv                            1/1       Running   0          4m
kube-system   kube-proxy-n8d8j                            1/1       Running   0          4m
kube-system   kube-proxy-wnl8w                            1/1       Running   0          27m
kube-system   kube-scheduler-master.kube.local            1/1       Running   0          27m
```

The kube-dns-86f4d74b45-vhvnv pod is still Pending because the flannel pod network has not been installed in the cluster yet.
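
On a larger cluster the pod list gets long, so a filter that shows only the pods that are not running can make a stuck pod like this one easier to spot. This is a sketch over the plain-text output; `non_running_pods` is a made-up name, and it assumes the `--all-namespaces` column layout in which STATUS is the fourth column.

```shell
# Read `kubectl get pods --all-namespaces` output on stdin and print
# "namespace/name status" for every pod that is not in the Running state.
non_running_pods() {
    # NR > 1 skips the header row; $4 is the STATUS column.
    awk 'NR > 1 && $4 != "Running" { print $1 "/" $2, $4 }'
}
```

Usage would be `kubectl get pods --all-namespaces | non_running_pods`. Pods that finished normally (`Completed`) are also listed by this simple comparison.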

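Install the pod network from the master node

Note that the flannel setup instructions expect the cluster to have been initialized with `kubeadm init --pod-network-cidr=10.244.0.0/16`. The plain `kubeadm init` used above omits that flag, so if the flannel pods fail to start, re-initializing the master with it is the first thing to try.

```
[root@master ~]# kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/v0.9.1/Documentation/kube-flannel.yml
clusterrole.rbac.authorization.k8s.io "flannel" created
clusterrolebinding.rbac.authorization.k8s.io "flannel" created
serviceaccount "flannel" created
configmap "kube-flannel-cfg" created
daemonset.extensions "kube-flannel-ds" created
```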

The nodes are now Ready

```
[root@master ~]# kubectl get nodes
NAME                STATUS    ROLES     AGE       VERSION
master.kube.local   Ready     master    39m       v1.10.3
node1.kube.local    Ready     <none>    15m       v1.10.3
node2.kube.local    Ready     <none>    15m       v1.10.3
```

The Kubernetes installation itself is now complete, but there is no dashboard yet and no application running on the cluster. In a follow-up tutorial we may cover installing the dashboard and managing containers on Kubernetes. That's all, thank you.

Reference:

www.linuxtechi.com/install-kubernetes-1-7-centos7-rhel7/
